On-Device AI for Dynamic Gaming

Fine-tuning approaches such as Low-Rank Adaptation (LoRA) can enable Small Language Models (SLMs) to match or exceed cloud LLM performance on specific tasks. Our work today is to build the on-device code that unlocks that performance for our gamers, regardless of hardware.

The core challenge for our Edge ML Engineers is to build, manage, and optimize the systems that make this possible. This technical interview is designed to simulate a core task you'd face here: taking a model and implementing a flexible, resource-efficient system to manage its behavior at runtime.

The Challenge: Building a Dynamic LoRA System

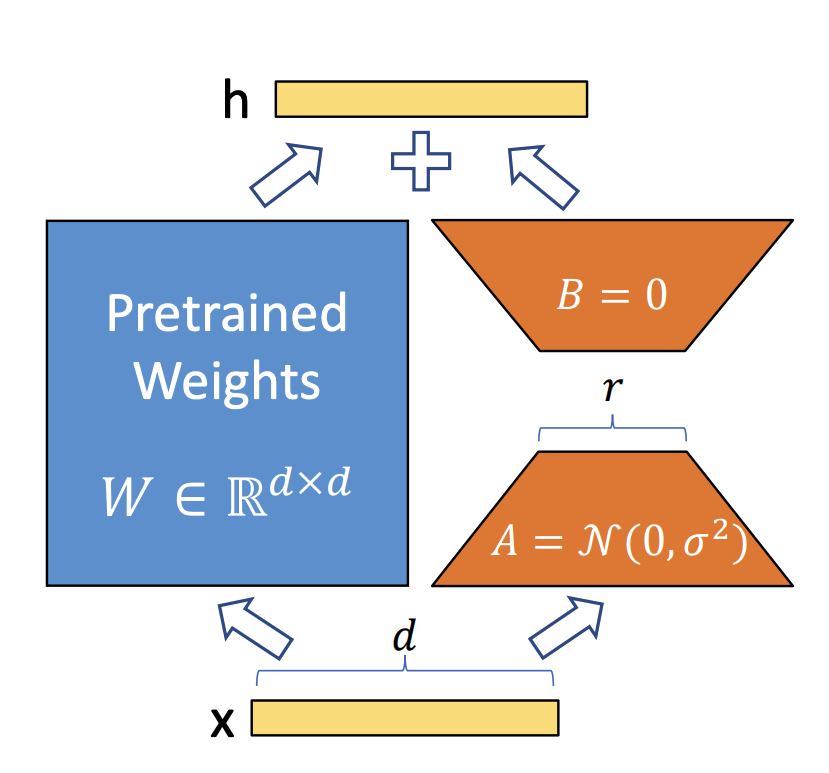

Your mission is to build a Rust-based system for managing Low-Rank Adaptation (LoRA) layers. LoRAs are small "adapter" weights that can modify a base model's behavior. For our game, this could mean swapping a character's personality, applying a new art style, or loading on-demand models for unique user-generated content. You will implement the core logic to load, activate, combine, and manage these adapters in memory.
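As a rough illustration of how an adapter modifies a linear layer, the sketch below follows the formulation in the LoRA paper: the base output `W x` gets a low-rank correction `(alpha / rank) * B (A x)` added to it. The names `LoraAdapter`, `matvec`, and `lora_forward` are hypothetical and are not the exercise's API. Plain `Vec<f32>` stands in for whatever tensor type the provided repository uses.

```rust
/// Hypothetical adapter for one linear layer (not the exercise's `LoraLinear`).
/// The weight update it represents is (alpha / rank) * B * A.
pub struct LoraAdapter {
    pub a: Vec<Vec<f32>>, // rank x in_features (assumes rank >= 1)
    pub b: Vec<Vec<f32>>, // out_features x rank
    pub alpha: f32,
}

/// Multiply a row-major matrix by a vector.
fn matvec(m: &[Vec<f32>], x: &[f32]) -> Vec<f32> {
    m.iter()
        .map(|row| row.iter().zip(x).map(|(w, v)| w * v).sum::<f32>())
        .collect()
}

/// y = W x + (alpha / rank) * B (A x).
/// With `adapter == None` this is just the base layer.
pub fn lora_forward(w: &[Vec<f32>], adapter: Option<&LoraAdapter>, x: &[f32]) -> Vec<f32> {
    let mut y = matvec(w, x);
    if let Some(ad) = adapter {
        let scale = ad.alpha / ad.a.len() as f32;
        let low = matvec(&ad.a, x); // project down to `rank` dimensions
        let delta = matvec(&ad.b, &low); // project back up to out_features
        for (yi, di) in y.iter_mut().zip(delta) {
            *yi += scale * di;
        }
    }
    y
}
```

Because the base weights `w` are never mutated, removing the adapter restores the original behavior at no cost, which is what makes swapping adapters at runtime cheap.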

The Exercises: A Step-by-Step Build

The interview is structured as a series of four exercises, each building on the last. For each step, you'll be provided with a runnable example file that defines the target API; your goal is to implement the underlying logic to make the example compile and run successfully.

| Part | Title | Goal |
|---|---|---|
| 1 | Base Example Runs | A warm-up exercise to verify your environment is set up. You'll simply compile and run a pre-written example that loads a base model from a `.safetensors` file. |
| 2 | A Single LoRA Adapter | Implement the fundamental logic for a `LoraLinear` layer. You will fill in the `load_adapter` and `forward` methods, and the top-level model API to manage it. |
| 3 | Dynamic Adapter Management | Extend the system to manage the lifecycle of multiple adapters. You will implement the logic to unload adapters from memory and rescale their influence on the fly. |
| 4 | Supporting Multiple Layer Types | Generalize the library to support LoRA for `Conv1d` and `Embedding` layers. You will then implement the final `KitchenSinkModel` to selectively apply adapters based on a configuration file's "target_modules" array. |
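To make the "target_modules" idea in Part 4 more concrete, here is a minimal sketch of deciding which layers receive an adapter. The matching rule is an assumption (exact name match, or a match on the final dotted segments of the name); the exercise's configuration format and semantics may differ. The functions `is_targeted` and `select_targets` and the example module names are hypothetical.

```rust
/// Returns true if `module_name` (e.g. "layers.0.q_proj") is selected by any
/// entry in `target_modules`.
/// Assumed rule: the name equals the target, or ends with ".<target>".
pub fn is_targeted(module_name: &str, target_modules: &[String]) -> bool {
    target_modules.iter().any(|t| {
        module_name == t.as_str()
            || module_name
                .strip_suffix(t.as_str())
                .map_or(false, |prefix| prefix.ends_with('.'))
    })
}

/// Keep only the module names that should get a LoRA adapter attached.
pub fn select_targets<'a>(module_names: &[&'a str], target_modules: &[String]) -> Vec<&'a str> {
    module_names
        .iter()
        .copied()
        .filter(|name| is_targeted(name, target_modules))
        .collect()
}
```

Under this rule, a target of `"embed"` would select a module named `embed` or `model.embed`, but not `my_embed`, since the suffix must start at a dot boundary.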

What We're Looking For

This exercise is designed as a collaborative, pair-programming session. We're interested in seeing how you approach problems, structure your code, and communicate your thought process. We encourage you to lead the way and to use the development workflow and tools you're most comfortable with (including AI assistants and StackOverflow). Be prepared to answer line-by-line questions about any code that comes from external sources. The goal is to simulate a real-world development task, not to quiz you on memorized facts or language syntax.

Prep Work

To set yourself up for success, we highly recommend you complete the following steps before the interview:

1. Unpack the Project & Set Up Your Environment

   Ensure you have a working Rust environment. Unpack the provided repository and run the first exercise (`cargo run --example 1_load_base_model -p lora-exercise`) to confirm everything is configured correctly.

2. Verify Your Setup

   Make sure exercise 1 compiles and runs successfully. Contact us early if there are any issues during this process.

3. Review the LoRA Concept

   We recommend reading the abstract and looking at the diagrams in the original LoRA paper: LoRA: Low-Rank Adaptation of Large Language Models.

4. Prepare Your Development Environment

   You are welcome to use any workflow you're comfortable with, including AI coding assistants. Please have your preferred Rust development environment set up and be prepared to explain any code you write or generate.

5. Brush Up on Key Rust Concepts

   The exercise will involve common Rust patterns. A quick review of Traits/Structs, `HashMap`/`Vec`, `Result` for error handling, and shared state (e.g. `Arc<Mutex<T>>`) may be helpful.
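For a feel of how the Rust patterns listed above can fit together, the following sketch combines a struct, trait implementations, a `HashMap`, `Result`-based errors, and `Arc<Mutex<T>>` shared state in a tiny adapter registry. Everything here (`AdapterRegistry`, `Adapter`, `AdapterError`, and the method names) is hypothetical and uses only the standard library; it is not the API you will be given in the exercise.

```rust
use std::collections::HashMap;
use std::fmt;
use std::sync::{Arc, Mutex};

/// Hypothetical error type for adapter management.
#[derive(Debug, PartialEq)]
pub enum AdapterError {
    NotFound(String),
    AlreadyLoaded(String),
}

impl fmt::Display for AdapterError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            AdapterError::NotFound(n) => write!(f, "adapter '{n}' is not loaded"),
            AdapterError::AlreadyLoaded(n) => write!(f, "adapter '{n}' is already loaded"),
        }
    }
}

impl std::error::Error for AdapterError {}

/// Placeholder adapter: only the scale is kept, weights are omitted.
#[derive(Debug, Clone)]
pub struct Adapter {
    pub scale: f32,
}

/// Cloning the registry clones the `Arc`, so all clones share one map.
#[derive(Clone, Default)]
pub struct AdapterRegistry {
    inner: Arc<Mutex<HashMap<String, Adapter>>>,
}

impl AdapterRegistry {
    pub fn load(&self, name: &str, scale: f32) -> Result<(), AdapterError> {
        // `unwrap` panics if another thread panicked while holding the lock.
        let mut map = self.inner.lock().unwrap();
        if map.contains_key(name) {
            return Err(AdapterError::AlreadyLoaded(name.to_string()));
        }
        map.insert(name.to_string(), Adapter { scale });
        Ok(())
    }

    pub fn set_scale(&self, name: &str, scale: f32) -> Result<(), AdapterError> {
        let mut map = self.inner.lock().unwrap();
        let adapter = map
            .get_mut(name)
            .ok_or_else(|| AdapterError::NotFound(name.to_string()))?;
        adapter.scale = scale;
        Ok(())
    }

    /// Removes the adapter and returns it, freeing its slot in the map.
    pub fn unload(&self, name: &str) -> Result<Adapter, AdapterError> {
        let mut map = self.inner.lock().unwrap();
        map.remove(name)
            .ok_or_else(|| AdapterError::NotFound(name.to_string()))
    }

    pub fn scale_of(&self, name: &str) -> Option<f32> {
        self.inner.lock().unwrap().get(name).map(|a| a.scale)
    }
}
```

Returning `Result` rather than panicking on a missing adapter lets the caller decide how to recover, and the `?` operator keeps that error handling terse.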